Real-Time Bidding in Advertising¶

In contrast to sponsored advertising, where advertisers set fixed bids, real-time bidding (RTB) lets you set a bid for every individual impression. When a user visits a website that supports ads, this triggers an auction in which advertisers bid for the impression. Advertisers must submit their bids within a time limit; 100 ms is a common one.
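To make the auction mechanics concrete, here is a minimal sketch of how a platform might resolve a single impression. The `Bid` type, the `resolve_auction` function, the tie-breaking rule, and the choice that the highest on-time bid simply wins are all assumptions made for illustration; real exchanges differ in their pricing rules and timeouts. Only the 100 ms deadline comes from the text above.

```python
from dataclasses import dataclass
from typing import List, Optional

DEADLINE_MS = 100  # common response limit mentioned in the text


@dataclass
class Bid:
    advertiser: str    # hypothetical advertiser name
    amount: float      # bid for this single impression
    latency_ms: float  # time taken to respond to the bid request


def resolve_auction(bids: List[Bid], deadline_ms: float = DEADLINE_MS) -> Optional[Bid]:
    """Return the highest bid that arrived within the deadline, or None."""
    on_time = [b for b in bids if b.latency_ms <= deadline_ms]
    if not on_time:
        return None
    # Assumption: the highest bid wins; ties go to the fastest response.
    return max(on_time, key=lambda b: (b.amount, -b.latency_ms))


bids = [
    Bid("a", amount=0.30, latency_ms=140.0),  # highest bid, but too slow
    Bid("b", amount=0.25, latency_ms=60.0),
    Bid("c", amount=0.20, latency_ms=35.0),
]
winner = resolve_auction(bids)
print(winner)
```

In this toy run the largest bid is discarded because it misses the deadline, which is why the bidding algorithm has to be fast as well as accurate.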

The advertising platform provides contextual and behavioral information to the advertiser for evaluation. The advertiser uses an automated algorithm to decide how much to bid based on that context. In the long term, the platform’s products must deliver a satisfying experience to maintain the advertising revenue stream. Advertisers, meanwhile, want to maximize some key performance indicator (KPI), for example the number of impressions or the click-through rate (CTR), for the least cost.

RTB presents a clear action (the bid), state (the information provided by the platform), and agent (the bidding algorithm). Both platforms and advertisers can use RL to optimize for their definition of reward.

To quickly demonstrate this idea, the code below simulates a bidding environment. First, let me install the dependencies.

Setup¶

!pip install pygame==1.9.6 pandas==1.0.5 matplotlib==3.2.1 gym==0.17.3 gym-display-advertising==0.0.1

!pip install --upgrade git+https://github.com/david-abel/simple_rl.git@77c0d6b910efbe8bdd5f4f87337c5bc4aed0d79c

import numpy as np

import pandas as pd

from simple_rl.agents import RandomAgent, DelayedQAgent, DoubleQAgent, QLearningAgent

import gym

import gym_display_advertising

import matplotlib

import matplotlib.pyplot as plt

matplotlib.use("agg", force=True)

%matplotlib inline

Params¶

eps = 0.05

gam = 0.99

alph = 0.0001

n_episodes = 200

Utils¶

This section contains quite a lot of code: helper functions that handle various parts of the problem. For example, I need to discretize the state space so that it works with the tabular value-based algorithms.

Then there is helper code for the training loop, which nests episodes inside repeated runs.

def state_mapping(obs):
    """
    Since this is tabular, we can't use real numbers. There would be an infinite
    number of states. Instead I round and convert to an integer. This is a
    simple form of _tile coding_.
    """
    return tuple(np.round(100 * obs[0:1]))

def run_episode(env, agent, learning=True):
    """Run one episode and return its total reward and the logged values."""
    episode_reward = 0
    observation = env.reset()
    episode_over = False
    reward = 0
    action_buffer = []
    while not episode_over:
        # Agents with a Q-function accept a `learning` flag; others do not.
        if hasattr(agent, "q_func"):
            action = agent.act(
                state_mapping(observation),
                reward,
                learning=learning)
        else:
            action = agent.act(
                state_mapping(observation),
                reward)
        # Log the first observation feature; it is stored downstream as the bid.
        action_buffer.append(observation[0])
        observation, reward, episode_over, _ = env.step(action)
        episode_reward += reward
    agent.end_of_episode()
    return episode_reward, action_buffer

def train_agent(env, agent_func, n_repeats):
    """Train a fresh agent n_repeats times, then run one test episode."""
    train_rewards_buffer = np.zeros((n_episodes, n_repeats))
    train_bid_buffer = np.zeros((n_episodes, n_repeats, env.batch_size))
    for instance in range(n_repeats):
        agent = agent_func(range(env.action_space.n))
        for episode in range(n_episodes):
            episode_reward, action_buffer = run_episode(env, agent)
            train_rewards_buffer[episode, instance] = episode_reward
            # Zero-pad the log to batch_size so every episode fits the buffer.
            train_bid_buffer[episode, instance, :] = np.pad(
                action_buffer, (0, env.batch_size - len(action_buffer)),
                'constant', constant_values=0)
    # Print the Q-table of the last trained agent, if it has one.
    if hasattr(agent, "q_func"):
        print_arbitrary_policy(agent.q_func)
    # Test: one episode with the last agent, with exploration switched off.
    agent.epsilon = 0
    episode_reward, test_bid_buffer = run_episode(env, agent, learning=False)
    print(
        "{}: {}".format(
            "TEST",
            episode_reward))
    print(train_rewards_buffer.transpose())
    print(test_bid_buffer)
    return train_rewards_buffer, train_bid_buffer.mean(axis=2)

def average(data):
    return pd.DataFrame(data.mean(axis=1))

def save(df, path):
    df.to_json(path)

def print_arbitrary_policy(Q):
    for state in sorted(Q.keys(), key=lambda x: (x is None, x)):
        values = Q[state].items()
        print("{}: {}".format(state, sorted(values)))
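The discretization step is easy to check in isolation. The sketch below mirrors the rounding used in `state_mapping`; the function name `discretize`, the `scale` parameter, and the sample observations are hypothetical. Note that only the first element of the observation is kept, so any other features do not influence the state key.

```python
import numpy as np


def discretize(obs, scale=100):
    """Scale the first observation feature, round it, and return a hashable key."""
    return tuple(np.round(scale * np.asarray(obs)[0:1]))


# Hypothetical observations whose first feature lies between 0 and 1.
a = discretize(np.array([0.2783, 0.5]))
b = discretize(np.array([0.2801, 0.9]))
c = discretize(np.array([0.2849, 0.1]))

# All three land in the same bucket, so they share one row of a Q-table.
q_table = {a: 0.0}
print(a == b == c, b in q_table)
```

With a scale of 100, a first feature between 0 and 1 maps to at most 101 distinct states, which keeps the table small enough for a tabular agent.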

Agent¶

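def q_agent(actions):
    return QLearningAgent(
        actions,
        gamma=gam,
        epsilon=eps,
        alpha=alph,
    )

def random_agent(actions):
    return RandomAgent(actions)

# NOTE: SARSAAgent is not imported in the Setup section and this factory is
# not used below; import a SARSA implementation before calling it.
def sarsa_agent(actions):
    return SARSAAgent(actions, 999, gamma=gam, epsilon=eps, alpha=alph)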
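For reference, here is a sketch of the standard tabular Q-learning update using the hyperparameters from the Params section. This is the textbook rule, written independently of `simple_rl`; I am assuming, not verifying, that `QLearningAgent` applies the same update. The helper names `epsilon_greedy` and `q_update` are illustrative, and the seven actions are taken from the printed Q-table further down.

```python
import random
from collections import defaultdict

eps, gam, alph = 0.05, 0.99, 0.0001  # values from the Params section
actions = range(7)                   # the printed Q-table has actions 0..6

# Q[state][action] -> value, defaulting to 0.0 for unseen pairs.
Q = defaultdict(lambda: defaultdict(float))


def epsilon_greedy(state, rng=random):
    """Explore with probability eps, otherwise pick the highest-valued action."""
    if rng.random() < eps:
        return rng.choice(list(actions))
    return max(actions, key=lambda a: Q[state][a])


def q_update(state, action, reward, next_state):
    """Q(s,a) <- Q(s,a) + alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))."""
    best_next = max(Q[next_state][a] for a in actions)
    td_error = reward + gam * best_next - Q[state][action]
    Q[state][action] += alph * td_error
    return Q[state][action]


# One update from an all-zero table with a reward of 1.
new_value = q_update(state=(28.0,), action=3, reward=1.0, next_state=(28.0,))
print(new_value)
```

With a learning rate this small, a single reward of 1 moves an estimate by roughly 0.0001, which may explain why so many of the printed Q-values sit close to that number.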

Running the Experiment¶

Finally, I’m going to run the experiments. I also print a lot of debugging output so you can see the raw Q-values. I encourage you to inspect these and investigate how they change through learning.

name = "bidding_rl_delta_q_learning"

env_name = "StaticDisplayAdvertising-v0"

num_repeats = 10

agent = q_agent

print("Starting {}".format(name))

env = gym.make(env_name)

rewards_buffer, bid_buffer = train_agent(env, agent, num_repeats)

save(average(rewards_buffer), name + ".json")

save(average(bid_buffer), name + "_bid.json")

print("Stopping {}".format(name))

Starting bidding_rl_delta_q_learning

(0.0,): [(0, 0.0), (1, 0.0), (2, 0.0), (3, 0.0), (4, 0.0), (5, 0.0), (6, 0.0)]

(1.0,): [(0, 0.0), (1, 0.0), (2, 0.0), (3, 0.0), (4, 0.0), (5, 0.0), (6, 0.0)]

(2.0,): [(0, 0.0), (1, 0.0), (2, 0.0), (3, 0.0), (4, 0.0), (5, 0.0), (6, 0.0)]

(3.0,): [(0, 0.0), (1, 0.0), (2, 0.0), (3, 0.0), (4, 0.0), (5, 0.0), (6, 0.0)]

(4.0,): [(0, 0.0), (1, 0.0), (2, 0.0), (3, 0.0), (4, 0.0), (5, 0.0), (6, 0.0)]

(5.0,): [(0, 0.0), (1, 0.0), (2, 0.0), (3, 0.0), (4, 0.0), (5, 0.0), (6, 1.5986184241244227e-16)]

(6.0,): [(0, 0.0), (1, 0.0), (2, 0.0), (3, 0.0), (4, 0.0), (5, 0.0), (6, 2.9767579532479464e-17)]

(7.0,): [(0, 0.0), (1, 0.0), (2, 0.0), (3, 0.0), (4, 0.0), (5, 0.0), (6, 2.1017160764932272e-12)]

(8.0,): [(0, 0.0), (1, 2.937298888795957e-16), (2, 0.0), (3, 0.0), (4, 0.0), (5, 0.0), (6, 0.0)]

(9.0,): [(0, 0.0), (1, 0), (2, 0.0), (3, 0.0), (4, 0.0), (5, 7.863725940834891e-13), (6, 0)]

(10.0,): [(0, 0.0), (1, 0.0), (2, 7.683171571207736e-20), (3, 3.385530538584227e-13), (4, 0.0), (5, 3.4611686752791275e-09), (6, 0.0)]

(11.0,): [(0, 0.0), (1, 9.558765177717925e-16), (2, 0.0), (3, 3.925213342567121e-13), (4, 1.1864155088453227e-12), (5, 1.1487337936278007e-07), (6, 2.1334634731235385e-10)]

(12.0,): [(0, 0.0), (1, 0), (2, 0.0), (3, 2.169797603618152e-09), (4, 5.238053608921087e-10), (5, 0.0), (6, 2.861207259076816e-05)]

(13.0,): [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0.0), (5, 0), (6, 0.00019348380980921889)]

(14.0,): [(0, 0), (1, 0.0), (2, 7.124021415011489e-10), (3, 0.0), (4, 1.2848041263229343e-10), (5, 1.5201707084878288e-10), (6, 0.0024657147527178034)]

(15.0,): [(0, 0.0), (1, 0.0), (2, 0), (3, 0), (4, 5.389611359550401e-06), (5, 0), (6, 0)]

(16.0,): [(0, 0.0), (1, 1.8921861445448376e-07), (2, 0), (3, 0), (4, 0), (5, 0.0), (6, 0.004610079904908892)]

(17.0,): [(0, 0.0), (1, 0.0), (2, 0.0), (3, 0.0), (4, 0), (5, 2.8823350348146305e-05), (6, 0.0006066167548883614)]

(18.0,): [(0, 1.4946323954474337e-17), (1, 6.047239739208024e-07), (2, 2.1096681193744704e-09), (3, 6.92846221865187e-08), (4, 2.58122203640065e-06), (5, 0.0060658262392547305), (6, 0)]

(19.0,): [(0, 4.440622564443755e-17), (1, 5.470419049657874e-07), (2, 1.4194100076143626e-06), (3, 3.9121732075389835e-06), (4, 0.0001001784086509009), (5, 0.025790050471078803), (6, 0.00020053623088479917)]

(20.0,): [(0, 2.5190132994218816e-12), (1, 2.3229604384118518e-05), (2, 3.899819647396959e-05), (3, 0.0009503177107922988), (4, 0.28819599250118233), (5, 0.00182065979608774), (6, 0.0022094835647729788)]

(21.0,): [(0, 4.07978856602482e-11), (1, 5.058889331997783e-05), (2, 0.15173186379013745), (3, 0.0029137067742536224), (4, 0.0031171609808562516), (5, 0.001902799298912826), (6, 0.00249768214882832)]

(22.0,): [(0, 0.0), (1, 0), (2, 0.015426124387019796), (3, 0.00010078070953049689), (4, 0.0001008141400611908), (5, 0.0002007620791866908), (6, 0)]

(23.0,): [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0), (5, 0.004216286889076344), (6, 0)]

(24.0,): [(0, 4.896174202132625e-10), (1, 0), (2, 0.00010007925305323805), (3, 0), (4, 0.010099827827821083), (5, 0.00010014893771066539), (6, 0.00010031667860332133)]

(25.0,): [(0, 0), (1, 0.00010009960891082659), (2, 0), (3, 0), (4, 0.0001005846869236042), (5, 0.014043641898698918), (6, 0.00020215776493054285)]

(26.0,): [(0, 0), (1, 0), (2, 0.008613994852213456), (3, 0), (4, 0.00010022929597136172), (5, 0), (6, 0.00010018904273016812)]

(27.0,): [(0, 2.6031070835389265e-07), (1, 0.0005042827601518867), (2, 0.00010010890248265988), (3, 0), (4, 0), (5, 0.0002008228932544119), (6, 0.017855431591600142)]

(28.0,): [(0, 2.2273832608930657e-06), (1, 0.0019140773652747219), (2, 0.001308096014059514), (3, 0.2182074069454753), (4, 0.0016007805238050967), (5, 0.001302907928894972), (6, 0.001010604942708029)]

(29.0,): [(0, 0), (1, 0), (2, 0.003162974819583311), (3, 0), (4, 0.00010017825314349963), (5, 0.00010008913487355903), (6, 0)]

(30.0,): [(0, 9.572112043379467e-10), (1, 0.011644321561148821), (2, 0), (3, 0.0002014589850891427), (4, 0.00020009893226886373), (5, 0.00010000003041687818), (6, 0)]

(31.0,): [(0, 2.3891165651271934e-06), (1, 0.00023600617369892116), (2, 0), (3, 0.00010017825314349963), (4, 0), (5, 0.002200472489744916), (6, 0)]

(32.0,): [(0, 0.0), (1, 0), (2, 0), (3, 0), (4, 0), (5, 0.00010000007590782745), (6, 0.0013007429751292585)]

(33.0,): [(0, 1.0906650653822107e-06), (1, 0), (2, 0), (3, 0), (4, 1.4241132538663945e-10), (5, 3.661444710925897e-07), (6, 0)]

(34.0,): [(0, 0), (1, 0.0026112081102226854), (2, 0), (3, 0), (4, 0), (5, 0), (6, 0)]

(35.0,): [(0, 1.4983320073365203e-06), (1, 0), (2, 0), (3, 0), (4, 0), (5, 0), (6, 0)]

(36.0,): [(0, 0), (1, 0.00010004950774456996), (2, 0), (3, 0.00010049466752330035), (4, 0), (5, 0), (6, 0.003997930612608225)]

(37.0,): [(0, 3.436782022501716e-06), (1, 0.00019995025428245792), (2, 0.0005997907153505415), (3, 0.058072233225188126), (4, 0.0005042449342761901), (5, 0.0009804148385238257), (6, 0.00020047619226734722)]

(38.0,): [(0, 1.3478838240934523e-06), (1, 0), (2, 0.006216906936272676), (3, 0.0001005532816692978), (4, 0.00010919964392670323), (5, 0), (6, 0.0001)]

(39.0,): [(0, 7.4393102662255105e-06), (1, 0.0001000000749269613), (2, 0.0002124685669219098), (3, 0.00020204261217701285), (4, 0.03330403969292585), (5, 0.00020006613017916548), (6, 0.00010022860280310472)]

(40.0,): [(0, 0.0019668257458606625), (1, 0.0006018072054467757), (2, 0.0012072469128189829), (3, 0.16185356405028956), (4, 0.0021994102911465866), (5, 0.0016998053928706566), (6, 0.001502311877657156)]

(41.0,): [(0, 0.0004516973448730999), (1, 0.0004115377752703198), (2, 0.035998466629186), (3, 0), (4, 0.00010002144203520524), (5, 0.00020002960781116428), (6, 0.00030030686132668475)]

(42.0,): [(0, 0.00010159003534500829), (1, 0.002409133998153044), (2, 0), (3, 0), (4, 0), (5, 0), (6, 0.0001)]

(43.0,): [(0, 0.0006678661955772906), (1, 0.00010177238252570789), (2, 0), (3, 0), (4, 0), (5, 0), (6, 0)]

(44.0,): [(0, 0.001511199210282052), (1, 0), (2, 0), (3, 1.980293805414213e-08), (4, 0), (5, 0), (6, 0)]

(45.0,): [(0, 0), (1, 7.172763282268792e-06), (2, 0), (3, 0), (4, 0), (5, 0.001100627986577247), (6, 0)]

(46.0,): [(0, 0), (1, 0.00010007922986307778), (2, 0), (3, 0), (4, 0), (5, 0), (6, 0)]

(47.0,): [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0.0030010394268519184), (5, 0.0001), (6, 0)]

(48.0,): [(0, 0), (1, 9.973790352670024e-09), (2, 0), (3, 0), (4, 0.0025015773579352227), (5, 0), (6, 0)]

(49.0,): [(0, 0), (1, 0), (2, 0.00020013852521863395), (3, 0.00010013877337162042), (4, 0), (5, 0.0018033917624542984), (6, 0)]

(50.0,): [(0, 0), (1, 0), (2, 0.00439645674010904), (3, 0.0001003561657243894), (4, 0), (5, 0), (6, 0)]

(51.0,): [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0), (5, 0), (6, 0.000499959406)]

(52.0,): [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0.0002003562630508606), (5, 0), (6, 0)]

(53.0,): [(0, 0), (1, 0), (2, 0), (3, 0), (4, 9.902941461308811e-09), (5, 0), (6, 0)]

(54.0,): [(0, 0), (1, 0), (2, 0.0001999999039214536), (3, 0), (4, 0.000199989902960102), (5, 0.004299683736341352), (6, 0)]

(55.0,): [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0), (5, 0.0027080430194056375), (6, 0)]

(56.0,): [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0.00010002971173039202), (5, 0), (6, 0)]

(57.0,): [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0), (5, 0.00030005909100506474), (6, 0.0)]

(58.0,): [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0), (5, 0.0003999796127781386), (6, 0)]

(59.0,): [(0, 0.0024120030072631933), (1, 0.0), (2, 0), (3, 0), (4, 0), (5, 0), (6, 0)]

(60.0,): [(0, 0.00592102694493925), (1, 3.860615955335337e-07), (2, 0.00020000980390991215), (3, 5.062314493971578e-07), (4, 3.9594066132711665e-08), (5, 0), (6, 0)]

(61.0,): [(0, 0.00010002971080472114), (1, 0.001401505222238687), (2, 0), (3, 0), (4, 0), (5, 0), (6, 0)]

(63.0,): [(0, 0.0005001769810456419), (1, 0), (2, 0), (3, 0), (4, 0), (5, 0), (6, 0)]

(64.0,): [(0, 0), (1, 0), (2, 0.0003001383903819786), (3, 0), (4, 0), (5, 0), (6, 0)]

(65.0,): [(0, 0), (1, 0.00040016818652469446), (2, 0), (3, 0), (4, 0), (5, 0), (6, 0)]

(72.0,): [(0, 0), (1, 0.0003999994196520371), (2, 0), (3, 0), (4, 0), (5, 0), (6, 0)]

(76.0,): [(0, 0), (1, 0), (2, 0.00039999940000078814), (3, 0), (4, 0), (5, 0), (6, 0)]

(77.0,): [(0, 0), (1, 0), (2, 0), (3, 0.0012999103030588307), (4, 0.0), (5, 0), (6, 0)]

(80.0,): [(0, 1.2472586490627816e-06), (1, 0), (2, 0), (3, 0), (4, 0), (5, 0), (6, 0)]

(85.0,): [(0, 0), (1, 0.0), (2, 0), (3, 0), (4, 0), (5, 0), (6, 0)]

TEST: 71

[[ 0. 42. 64. ... 44. 62. 54.]

[36. 65. 27. ... 81. 75. 85.]

[ 0. 39. 74. ... 45. 45. 42.]

...

[ 3. 17. 40. ... 71. 67. 58.]

[ 0. 0. 0. ... 61. 61. 40.]

[ 1. 0. 1. ... 77. 49. 0.]]

[0.2300021626161211, 0.25300237887773325, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 
0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655, 0.27830261676550655]

Stopping bidding_rl_delta_q_learning
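One way to read a table like this is to reduce each state to its highest-valued action. The sketch below does that for a plain dict of dicts; the three rows are rounded excerpts from the output above, and `greedy_policy` is a hypothetical helper, not part of `simple_rl`.

```python
def greedy_policy(q):
    """Map each state to the action with the largest Q-value."""
    return {state: max(values, key=values.get) for state, values in q.items()}


# Rounded excerpts from the printed Q-table: state -> {action: value}.
q_excerpt = {
    (20.0,): {0: 0.0, 1: 2.3e-05, 2: 3.9e-05, 3: 9.5e-04, 4: 0.288, 5: 1.8e-03, 6: 2.2e-03},
    (21.0,): {0: 0.0, 1: 5.1e-05, 2: 0.152, 3: 2.9e-03, 4: 3.1e-03, 5: 1.9e-03, 6: 2.5e-03},
    (28.0,): {0: 2.2e-06, 1: 1.9e-03, 2: 1.3e-03, 3: 0.218, 4: 1.6e-03, 5: 1.3e-03, 6: 1.0e-03},
}

policy = greedy_policy(q_excerpt)
for state in sorted(policy):
    print(state, "->", policy[state])
```

In this excerpt, state (28.0,) prefers action 3. That state corresponds to a first observation feature of about 0.28, which is where the logged values of the test run settle (0.2783...).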

Next I run the same code again but this time with the random agent.

name = "bidding_rl_delta_random"

env_name = "StaticDisplayAdvertising-v0"

num_repeats = 10

agent = random_agent

print("Starting {}".format(name))

env = gym.make(env_name)

rewards_buffer, bid_buffer = train_agent(env, agent, num_repeats)

save(average(rewards_buffer), name + ".json")

save(average(bid_buffer), name + "_bid.json")

print("Stopping {}".format(name))

Starting bidding_rl_delta_random

TEST: 1

[[ 0. 10. 4. ... 19. 51. 48.]

[ 0. 0. 13. ... 19. 0. 2.]

[ 0. 0. 0. ... 10. 12. 12.]

...

[ 6. 1. 1. ... 35. 14. 3.]

[ 6. 18. 12. ... 5. 25. 1.]

[ 0. 0. 0. ... 16. 0. 1.]]

[0.28246021011155464, 0.26833719960597696, 0.13416859980298848, 0.1408770297931379, 0.1267893268138241, 0.13946825949520653, 0.1534150854447272, 0.0767075427223636, 0.0383537713611818, 0.0383537713611818, 0.04027145992924089, 0.04027145992924089, 0.04027145992924089, 0.020135729964620444, 0.02114251646285147, 0.010571258231425735, 0.005285629115712867, 0.0026428145578564336, 0.003964221836784651, 0.004360644020463115, 0.003924579618416804, 0.005886869427625206, 0.005592525956243945, 0.005592525956243945, 0.005872152254056143, 0.006459367479461757, 0.006782335853434846, 0.003391167926717423, 0.003221609530381552, 0.003221609530381552, 0.002899448577343397, 0.0014497242886716984, 0.0014497242886716984, 0.002174586433007548, 0.001957127789706793, 0.0009785638948533965, 0.0008807075053680569, 0.00044035375268402843, 0.0004843891279524313, 0.0004843891279524313, 0.0004359502151571882, 0.0004359502151571882, 0.0003923551936414694, 0.0003923551936414694, 0.0001961775968207347, 0.00029426639523110203, 0.00027955307546954694, 0.00027955307546954694, 0.00029353072924302433, 0.00029353072924302433, 0.00014676536462151216, 0.00022014804693226825, 0.00011007402346613412, 9.906662111952071e-05, 4.953331055976036e-05, 7.429996583964055e-05, 8.172996242360461e-05, 7.355696618124415e-05, 6.620126956311975e-05, 9.930190434467963e-05, 4.9650952172339815e-05, 4.9650952172339815e-05, 7.447642825850972e-05, 8.19240710843607e-05, 7.373166397592463e-05, 7.741824717472087e-05, 7.354733481598483e-05, 6.986996807518558e-05, 6.637646967142629e-05, 5.973882270428367e-05, 5.973882270428367e-05, 6.272576383949784e-05, 6.586205203147275e-05, 5.927584682832547e-05, 5.3348262145492926e-05, 2.6674131072746463e-05, 2.4006717965471818e-05, 2.2806382067198227e-05, 2.394670117055814e-05, 2.1552031053502322e-05, 3.232804658025348e-05, 4.849206987038022e-05, 4.606746637686121e-05, 4.837083969570427e-05, 4.353375572613385e-05, 4.7887131298747235e-05, 7.183069694812086e-05, 7.183069694812086e-05, 
7.901376664293296e-05, 3.950688332146648e-05, 4.345757165361313e-05, 6.518635748041969e-05, 9.777953622062954e-05, 8.80015825985666e-05, 4.40007912992833e-05, 4.40007912992833e-05, 2.200039564964165e-05, 1.9800356084677485e-05, 2.1780391693145236e-05, 1.0890195846572618e-05]

Stopping bidding_rl_delta_random

Results¶

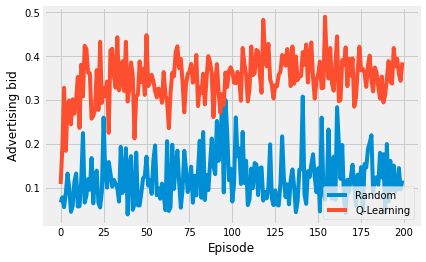

The first plot presents the sum of the rewards in each episode, over 200 episodes, averaged over 10 runs. You’d need to average over more runs to make the plots smoother.

You can see that the RL-based agent quickly learns where to position the bid amount in order to maximize the reward.
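If rerunning the experiment with more repeats is too slow, a moving average over episodes is a cheap way to smooth a curve. Note that this is a different technique from averaging over more runs: it trades responsiveness for smoothness. The sketch below uses synthetic data and a hypothetical window of 10 episodes; it does not read the JSON files produced above.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)

# Synthetic stand-in for an averaged reward curve: 200 noisy episodes.
episodes = 200
raw = pd.Series(np.linspace(0, 60, episodes) + rng.normal(0, 10, episodes))

# Rolling mean over a 10-episode window; min_periods=1 keeps the first points.
window = 10
smooth = raw.rolling(window=window, min_periods=1).mean()

# Compare episode-to-episode jitter before and after smoothing.
print(raw.diff().std(), smooth.diff().std())
```

A larger window gives a smoother line but delays the point at which a jump in reward becomes visible.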

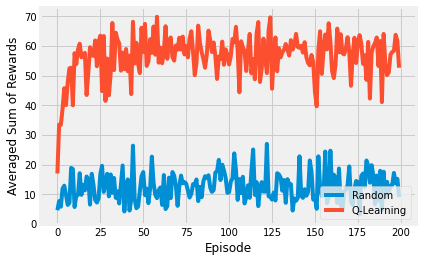

The second plot shows how the bid amount changes over the episodes.

data_files = [("Random", "bidding_rl_delta_random.json"),
              ("Q-Learning", "bidding_rl_delta_q_learning.json")]

fig, ax = plt.subplots()
for j, (name, data_file) in enumerate(data_files):
    df = pd.read_json(data_file)
    x = range(len(df))
    y = df.sort_index().values
    ax.plot(x,
            y,
            linestyle='solid',
            label=name)
ax.set_xlabel('Episode')
ax.set_ylabel('Averaged Sum of Rewards')
ax.legend(loc='lower right')
plt.show()

data_files = [("Random", "bidding_rl_delta_random_bid.json"),
              ("Q-Learning", "bidding_rl_delta_q_learning_bid.json")]

fig, ax = plt.subplots()
for j, (name, data_file) in enumerate(data_files):
    df = pd.read_json(data_file)
    x = range(len(df))
    y = df.sort_index().values
    ax.plot(x,
            y,
            linestyle='solid',
            label=name)
ax.set_xlabel('Episode')
ax.set_ylabel('Advertising bid')
ax.legend(loc='lower right')
plt.show()